Feb 08, 2022

Running the LeNet Sample on an Alibaba Cloud DSW Instance

Step By Step

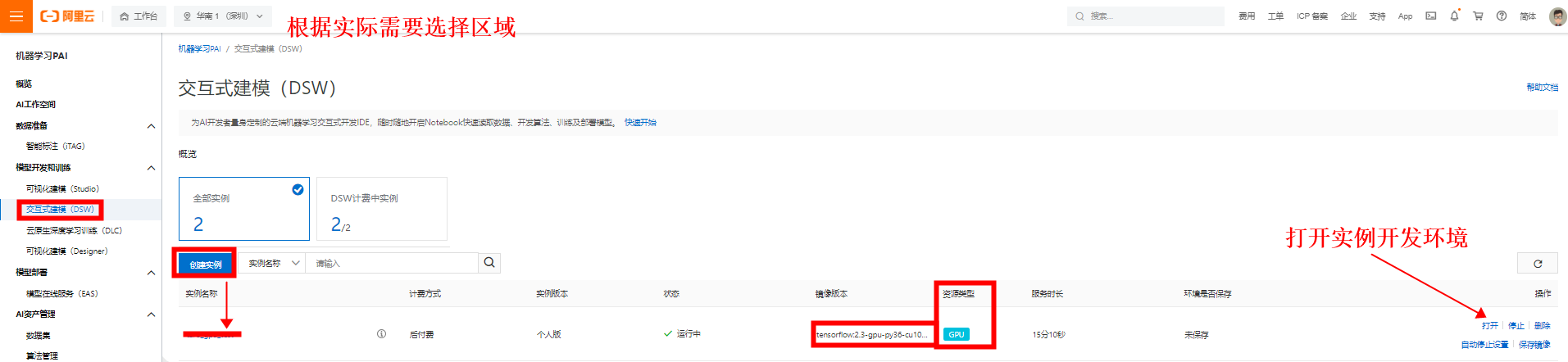

1、创立GPU实例

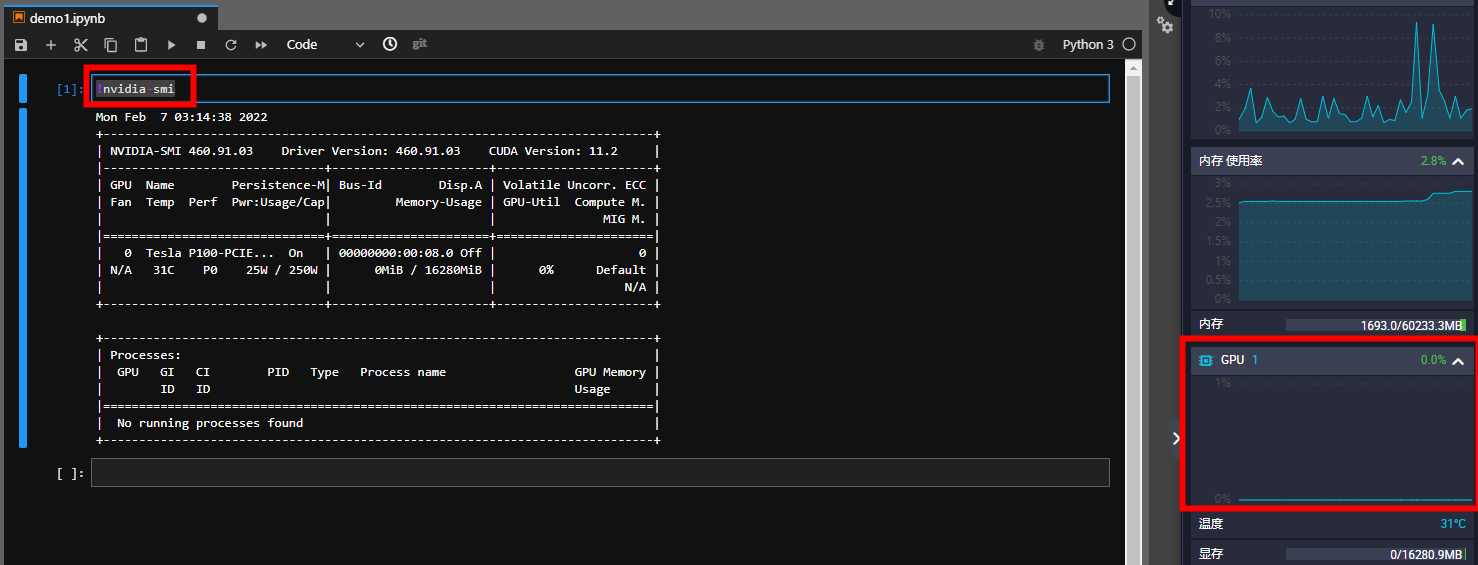

2、检查运用GPU卡状况

3、LetNet Code运转示例

4、显存开释问题

I. Create a GPU Instance

- Log in to the DSW console

II. Check the GPU Status

!nvidia-smi

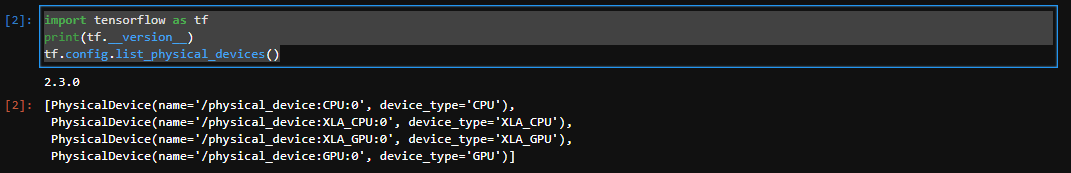

- Check the TensorFlow version and device information

import tensorflow as tf

print(tf.__version__)

tf.config.list_physical_devices()
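The GPU check above can also be scripted when the memory figures are needed inside a notebook cell rather than read off the `nvidia-smi` table. The following is a minimal sketch, assuming `nvidia-smi` is on the PATH and reports numeric memory values; the helper names `parse_gpu_csv` and `query_gpus` are made up for this illustration.

```python
import shutil
import subprocess

# Ask nvidia-smi for a machine-readable summary (values in MiB, no header row).
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]

def parse_gpu_csv(text):
    """Parse 'name, used, total' CSV rows into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        # Split from the right so a comma inside the GPU name cannot break parsing.
        name, used, total = [field.strip() for field in line.rsplit(",", 2)]
        gpus.append({"name": name, "used_mib": int(used), "total_mib": int(total)})
    return gpus

def query_gpus():
    """Return per-GPU memory usage, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)

if __name__ == "__main__":
    for gpu in query_gpus():
        print(f"{gpu['name']}: {gpu['used_mib']} / {gpu['total_mib']} MiB")
```

Calling `query_gpus()` before and after a training run is one way to see whether memory is still held once the notebook kernel has finished.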

III. LeNet Code Example

- 3.1 Code Sample

import tensorflow as tf
import matplotlib.pyplot as plt
from tensorflow import keras

# Data preprocessing function (applied to whole batches)
def preprocess(x, y):
    x = tf.cast(x, dtype=tf.float32) / 255.  # scale pixels to [0, 1]
    x = tf.reshape(x, [-1, 32, 32, 1])       # add the channel dimension
    y = tf.one_hot(y, depth=10)              # one-hot encoding
    return x, y

# Load the dataset
(x_train, y_train), (x_test, y_test) = tf.keras.datasets.fashion_mnist.load_data()

# Zero-pad each image by 2 pixels on every side, turning 28*28 images into 32*32 images
paddings = tf.constant([[0, 0], [2, 2], [2, 2]])
x_train = tf.pad(x_train, paddings)
x_test = tf.pad(x_test, paddings)

train_db = tf.data.Dataset.from_tensor_slices((x_train, y_train))
train_db = train_db.shuffle(10000)  # shuffle the training samples
train_db = train_db.batch(128)
train_db = train_db.map(preprocess)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test))
test_db = test_db.shuffle(10000)  # shuffle the test samples
test_db = test_db.batch(128)
test_db = test_db.map(preprocess)

batch = 32  # batch dimension passed to model.build() below; the datasets above are batched at 128

# Build the model
model = keras.Sequential([
    # Convolutional layer 1
    keras.layers.Conv2D(6, 5),  # 6 kernels of 5*5 over the single-channel 32*32 image, giving 6 feature maps of 28*28
    keras.layers.MaxPooling2D(pool_size=2, strides=2),  # 2*2 max pooling on the 28*28 feature maps, giving 14*14
    keras.layers.ReLU(),  # ReLU activation
    # Convolutional layer 2
    keras.layers.Conv2D(16, 5),  # 16 kernels of 5*5 over the 6-channel 14*14 input, giving 16 feature maps of 10*10
    keras.layers.MaxPooling2D(pool_size=2, strides=2),  # 2*2 max pooling on the 10*10 feature maps, giving 5*5
    keras.layers.ReLU(),  # ReLU activation
    # Convolutional layer 3
    keras.layers.Conv2D(120, 5),  # 120 kernels of 5*5 over the 16-channel 5*5 input, giving 120 feature maps of 1*1
    keras.layers.ReLU(),  # ReLU activation
    # Flatten the (None, 1, 1, 120) feature maps to shape (None, 120)
    keras.layers.Flatten(),
    # Fully connected layer 1
    keras.layers.Dense(84, activation='relu'),  # 120*84
    # Fully connected layer 2
    keras.layers.Dense(10, activation='softmax')  # 84*10
])

model.build(input_shape=(batch, 32, 32, 1))
model.summary()

model.compile(optimizer=keras.optimizers.Adam(),
              loss=keras.losses.CategoricalCrossentropy(),
              metrics=['accuracy'])

# Train
history = model.fit(train_db, epochs=50)

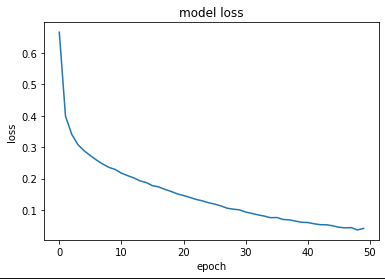

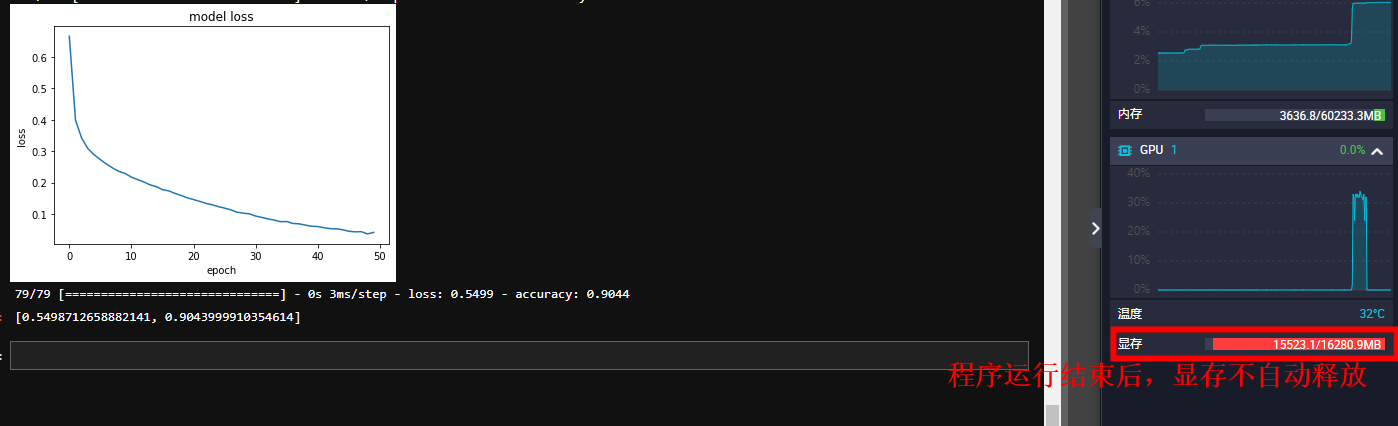

# Loss curve
plt.plot(history.history['loss'])
plt.title('model loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.show()

# Evaluate on the test set
model.evaluate(test_db)
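The shape arithmetic in the data pipeline above can be checked without TensorFlow. This is a small sketch in plain Python: each `[before, after]` pair in `paddings` grows one axis, and `batch(128)` keeps the last partial batch, so the number of steps is a ceiling division. The helper names are illustrative only; the sample counts (60,000 training and 10,000 test images) are those of Fashion-MNIST.

```python
import math

def padded_shape(shape, paddings):
    """Shape after padding: each axis grows by its (before, after) pair."""
    return tuple(dim + before + after for dim, (before, after) in zip(shape, paddings))

def steps_per_epoch(num_samples, batch_size):
    """Number of batches when the final partial batch is kept."""
    return math.ceil(num_samples / batch_size)

paddings = [[0, 0], [2, 2], [2, 2]]  # same pairs as in the script: batch axis untouched

print(padded_shape((60000, 28, 28), paddings))  # (60000, 32, 32)
print(steps_per_epoch(60000, 128))              # 469 training steps per epoch
print(steps_per_epoch(10000, 128))              # 79 evaluation steps
```

The values 469 and 79 are the step counts that appear in the progress bars of the training and evaluation output.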

- Output

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-labels-idx1-ubyte.gz
32768/29515 [=================================] - 0s 1us/step
Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-images-idx3-ubyte.gz
26427392/26421880 [==============================] - 1s 0us/step
Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-labels-idx1-ubyte.gz
8192/5148 [===============================================] - 0s 0us/step
Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-images-idx3-ubyte.gz
4423680/4422102 [==============================] - 0s 0us/step

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (32, 28, 28, 6)           156
_________________________________________________________________
max_pooling2d (MaxPooling2D) (32, 14, 14, 6)           0
_________________________________________________________________
re_lu (ReLU)                 (32, 14, 14, 6)           0
_________________________________________________________________
conv2d_1 (Conv2D)            (32, 10, 10, 16)          2416
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (32, 5, 5, 16)            0
_________________________________________________________________
re_lu_1 (ReLU)               (32, 5, 5, 16)            0
_________________________________________________________________
conv2d_2 (Conv2D)            (32, 1, 1, 120)           48120
_________________________________________________________________
re_lu_2 (ReLU)               (32, 1, 1, 120)           0
_________________________________________________________________
flatten (Flatten)            (32, 120)                 0
_________________________________________________________________
dense (Dense)                (32, 84)                  10164
_________________________________________________________________
dense_1 (Dense)              (32, 10)                  850
=================================================================
Total params: 61,706
Trainable params: 61,706
Non-trainable params: 0
_________________________________________________________________
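The output shapes and parameter counts in the model summary can be reproduced by hand. The sketch below assumes the Keras defaults used in the script (no padding, stride 1 for `Conv2D`) and uses illustrative helper names: an unpadded convolution or pooling maps a side of length n to (n - k) / s + 1, a convolution layer holds k * k * in_channels * out_channels weights plus one bias per output channel, and a dense layer holds in * out weights plus one bias per output unit.

```python
def out_size(size, kernel, stride=1):
    """Side length after an unpadded ('valid') convolution or pooling."""
    return (size - kernel) // stride + 1

def conv_params(kernel, in_channels, out_channels):
    """Weights plus one bias per output channel."""
    return kernel * kernel * in_channels * out_channels + out_channels

def dense_params(in_units, out_units):
    """Weights plus one bias per output unit."""
    return in_units * out_units + out_units

size = 32
size = out_size(size, 5)       # conv2d:          28
size = out_size(size, 2, 2)    # max_pooling2d:   14
size = out_size(size, 5)       # conv2d_1:        10
size = out_size(size, 2, 2)    # max_pooling2d_1: 5
size = out_size(size, 5)       # conv2d_2:        1

params = [
    conv_params(5, 1, 6),      # conv2d:   156
    conv_params(5, 6, 16),     # conv2d_1: 2416
    conv_params(5, 16, 120),   # conv2d_2: 48120
    dense_params(120, 84),     # dense:    10164
    dense_params(84, 10),      # dense_1:  850
]

print(size)         # 1
print(sum(params))  # 61706
```

Pooling, ReLU and Flatten layers have no trainable parameters, which is why they show 0 in the summary.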

Epoch 1/50
469/469 [==============================] - 2s 4ms/step - loss: 0.6662 - accuracy: 0.7553
Epoch 2/50
469/469 [==============================] - 2s 4ms/step - loss: 0.3988 - accuracy: 0.8569
Epoch 3/50
469/469 [==============================] - 2s 4ms/step - loss: 0.3414 - accuracy: 0.8751
Epoch 4/50
469/469 [==============================] - 2s 4ms/step - loss: 0.3081 - accuracy: 0.8861
Epoch 5/50
469/469 [==============================] - 2s 4ms/step - loss: 0.2888 - accuracy: 0.8938
Epoch 6/50
469/469 [==============================] - 2s 4ms/step - loss: 0.2733 - accuracy: 0.8997
Epoch 7/50
469/469 [==============================] - 2s 4ms/step - loss: 0.2590 - accuracy: 0.9039
Epoch 8/50
469/469 [==============================] - 2s 4ms/step - loss: 0.2464 - accuracy: 0.9078
Epoch 9/50
469/469 [==============================] - 2s 4ms/step - loss: 0.2358 - accuracy: 0.9126
Epoch 10/50
469/469 [==============================] - 2s 4ms/step - loss: 0.2292 - accuracy: 0.9155
Epoch 11/50
469/469 [==============================] - 2s 4ms/step - loss: 0.2175 - accuracy: 0.9190
Epoch 12/50
469/469 [==============================] - 2s 4ms/step - loss: 0.2096 - accuracy: 0.9227
Epoch 13/50
469/469 [==============================] - 2s 4ms/step - loss: 0.2022 - accuracy: 0.9239
Epoch 14/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1929 - accuracy: 0.9284
Epoch 15/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1875 - accuracy: 0.9301
Epoch 16/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1776 - accuracy: 0.9342
Epoch 17/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1737 - accuracy: 0.9349
Epoch 18/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1658 - accuracy: 0.9379
Epoch 19/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1592 - accuracy: 0.9407
Epoch 20/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1515 - accuracy: 0.9438
Epoch 21/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1461 - accuracy: 0.9445
Epoch 22/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1403 - accuracy: 0.9467
Epoch 23/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1340 - accuracy: 0.9487
Epoch 24/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1295 - accuracy: 0.9506
Epoch 25/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1235 - accuracy: 0.9536
Epoch 26/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1189 - accuracy: 0.9545
Epoch 27/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1134 - accuracy: 0.9571
Epoch 28/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1059 - accuracy: 0.9602
Epoch 29/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1028 - accuracy: 0.9615
Epoch 30/50
469/469 [==============================] - 2s 4ms/step - loss: 0.1006 - accuracy: 0.9616
Epoch 31/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0936 - accuracy: 0.9644
Epoch 32/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0892 - accuracy: 0.9663
Epoch 33/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0846 - accuracy: 0.9683
Epoch 34/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0807 - accuracy: 0.9692
Epoch 35/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0756 - accuracy: 0.9711
Epoch 36/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0763 - accuracy: 0.9718
Epoch 37/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0700 - accuracy: 0.9732
Epoch 38/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0687 - accuracy: 0.9748
Epoch 39/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0648 - accuracy: 0.9754
Epoch 40/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0611 - accuracy: 0.9771
Epoch 41/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0602 - accuracy: 0.9774
Epoch 42/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0564 - accuracy: 0.9793
Epoch 43/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0533 - accuracy: 0.9807
Epoch 44/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0531 - accuracy: 0.9803
Epoch 45/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0499 - accuracy: 0.9809
Epoch 46/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0452 - accuracy: 0.9827
Epoch 47/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0433 - accuracy: 0.9847
Epoch 48/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0439 - accuracy: 0.9838
Epoch 49/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0367 - accuracy: 0.9864
Epoch 50/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0415 - accuracy: 0.9847
79/79 [==============================] - 0s 3ms/step - loss: 0.5499 - accuracy: 0.9044
[0.5498712658882141, 0.9043999910354614]
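When the training output has been saved as text, the per-epoch numbers can be pulled back out with a regular expression. This is a rough sketch, assuming the log follows the `Epoch N/M ... loss: X - accuracy: Y` pattern seen in this run; `parse_training_log` is a hypothetical helper, and the sample string holds the first and last epochs copied from the output above.

```python
import re

# One match per epoch; DOTALL lets the pattern span a line break inside an entry.
EPOCH_RE = re.compile(
    r"Epoch (\d+)/\d+.*?loss:\s*([\d.]+)\s*-\s*accuracy:\s*([\d.]+)",
    re.DOTALL,
)

def parse_training_log(text):
    """Return a list of (epoch, loss, accuracy) tuples found in a Keras fit log."""
    return [(int(e), float(l), float(a)) for e, l, a in EPOCH_RE.findall(text)]

sample = """Epoch 1/50
469/469 [==============================] - 2s 4ms/step - loss: 0.6662 - accuracy: 0.7553
Epoch 50/50
469/469 [==============================] - 2s 4ms/step - loss: 0.0415 - accuracy: 0.9847
"""

rows = parse_training_log(sample)
final_train_acc = rows[-1][2]
test_acc = 0.9044  # from the model.evaluate() line of this run

print(rows[0])                              # (1, 0.6662, 0.7553)
print(round(final_train_acc - test_acc, 4))  # 0.0803
```

In this run the training accuracy ends about 8 points above the test accuracy, and the test loss (0.5499) is far above the final training loss (0.0415), which may indicate overfitting over 50 epochs.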

IV. GPU Memory Release Issue

- 4.1 Symptom

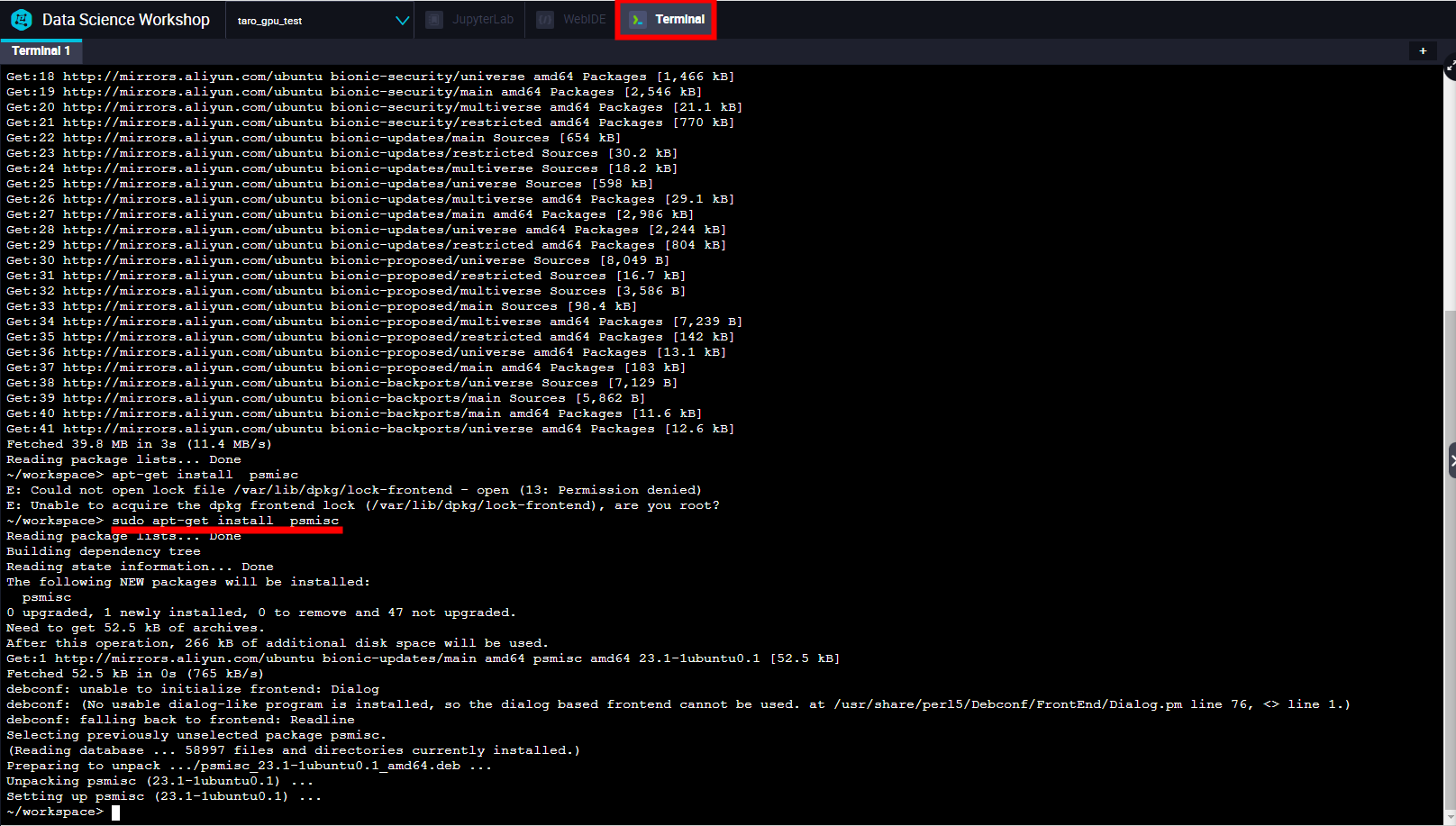

- 4.2 Install fuser from the terminal

sudo apt-get update

sudo apt-get install psmisc

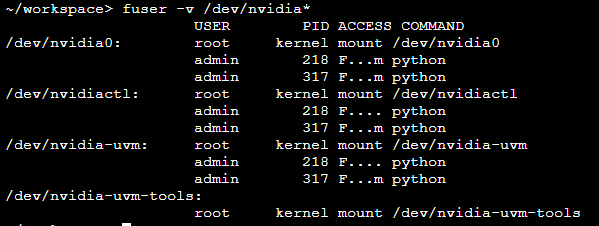

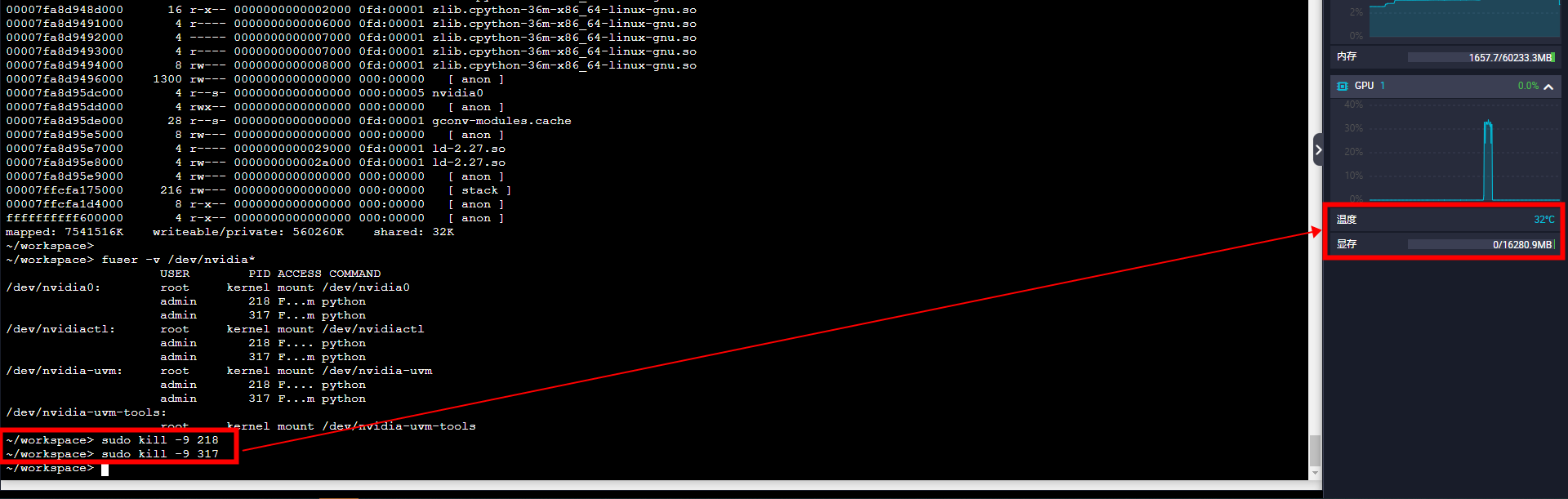

- 4.3 Find zombie processes that still hold the GPU

fuser -v /dev/nvidia*
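If `fuser` cannot be installed, a similar list can be approximated from Python by scanning `/proc`. The sketch below is Linux-only and only looks at open file descriptors, so it is a rough stand-in for `fuser -v /dev/nvidia*` rather than a replacement; `find_gpu_pids` is a hypothetical helper, and processes owned by other users are skipped unless the script runs with sufficient privileges.

```python
import os

def find_gpu_pids(proc_root="/proc", device_prefix="/dev/nvidia"):
    """Return {pid: sorted device paths} for processes with open NVIDIA device files."""
    holders = {}
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # only numeric directories are processes
        fd_dir = os.path.join(proc_root, entry, "fd")
        try:
            fds = os.listdir(fd_dir)
        except OSError:
            continue  # process exited, or we lack permission to inspect it
        devices = set()
        for fd in fds:
            try:
                target = os.readlink(os.path.join(fd_dir, fd))
            except OSError:
                continue  # descriptor closed while we were scanning
            if target.startswith(device_prefix):
                devices.add(target)
        if devices:
            holders[int(entry)] = sorted(devices)
    return holders

if __name__ == "__main__" and os.path.isdir("/proc"):
    for pid, devices in sorted(find_gpu_pids().items()):
        print(pid, ", ".join(devices))
```

The PIDs printed here are the ones to inspect or stop in the following steps.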

- 4.4 Inspect how a specific process is using the GPU

pmap -d PID

- 4.5 Force-kill the zombie processes that are no longer doing any work

sudo kill -9 PID
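`kill -9` sends SIGKILL, which gives the process no chance to clean up. A gentler variant, not what the command above does, is to send SIGTERM first and fall back to SIGKILL only if the process is still present after a grace period. The sketch below assumes a POSIX system and a process owned by the current user; `stop_process` and its parameters are names chosen for this example.

```python
import os
import signal
import time

def is_running(pid):
    """True if a process with this PID exists (signal 0 only checks existence)."""
    try:
        os.kill(pid, 0)
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # exists, but belongs to another user
    return True

def stop_process(pid, grace_seconds=5.0, poll_interval=0.1):
    """Send SIGTERM, wait up to grace_seconds, then send SIGKILL if still present."""
    try:
        os.kill(pid, signal.SIGTERM)
    except ProcessLookupError:
        return "not running"
    deadline = time.monotonic() + grace_seconds
    while time.monotonic() < deadline:
        if not is_running(pid):
            return "terminated"
        time.sleep(poll_interval)
    try:
        os.kill(pid, signal.SIGKILL)
    except ProcessLookupError:
        return "terminated"
    return "killed"
```

For example, `stop_process(12345)` would try to stop PID 12345 (a placeholder) and report whether SIGTERM was enough. A process that has exited but has not yet been reaped by its parent still counts as present for the existence check, so the function may report `"killed"` for it.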

